Abstract

Rhizomatiks has been developing the "Fencing tracking and visualization system," the core technology of the "Fencing Visualized Project," in collaboration with Dentsu Lab Tokyo. The system uses AR technology to visualize the tips of swords in motion. Building on development work that began in 2012, it has been updated to use deep learning to visualize sword tips without markers. It detects sword tips that the human eye cannot follow and uses real-time AR synthesis to visualize their trajectories instantly.

This website explains our development process from 2012 to the present.

Background

Fencing is one of the oldest sports in the world. Although it is well known around the world, most people are not familiar with its rules and have difficulty understanding what is going on when they watch a match.

Project Background

In 2013, Yuki Ota, the first Japanese Olympic medalist in fencing, and Dentsu were planning a research and development project that would use technology to shape the future of the sports spectating experience.

Given the theme of making fencing easier to understand and more appealing for the audience, Rhizomatiks proposed the idea of visualizing the tips of swords and started prototyping, and the "Fencing Visualized Project" was officially launched.

Rhizomatiks’ Background

Back in 2012, Daito Manabe and Motoi Ishibashi were working on an AR project using motion capture, a high-speed camera, homemade markers, and software to track and visualize the rapid motion of dancers. The project was primarily an attempt to track performers' motion and synthesize graphics into their movements.

That was when the concept of “Fencing x Technology” was suggested, and we came up with the idea of deploying a system that we already knew well and had used in real projects.

A fencing sword tip is difficult to follow with the naked eye, but we thought that it could be captured with a high-speed camera tracking a small marker on the tip of the sword. We began by conducting a feasibility study.

After the study, we were confident that a sword tip could be tracked by placing a marker on it, even with the technology available in 2012. We created video footage that used AR to visualize the trajectory of a sword tip, and it was included in a video for Tokyo’s bid to host the 2020 Summer Olympic and Paralympic Games.

In addition, anticipating that advances in machine learning and image analysis would very likely make it possible to use the system in real matches, without markers on the sword tips, by the time of the Tokyo 2020 Olympics, we planned and proposed the "Fencing tracking and visualization system," a core technology that uses AR to visualize the trajectory of the sword tip, and began research and development.

Thanks to real-time trajectory detection, fencing is now evolving into a sport that everyone can enjoy, because viewers can understand what is happening in the match.

System

When we started this project in 2013, we used an optical motion capture system with retro-reflective markers to determine the trajectory of sword tips. To appeal to audiences who had not seen fencing before, AR visualization effects were added to the trajectory data in post-production.
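Under an optical motion capture setup, a retro-reflective marker shows up in the camera image as a small, very bright blob, so its 2D position can be found by thresholding and taking an intensity-weighted centroid. The source does not describe the internals of the motion capture system used; the sketch below is a generic illustration of that idea, assuming a grayscale frame given as a list of pixel rows, a single visible marker, and a hypothetical threshold of 200.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]  # grayscale image as rows of 0-255 pixel values


def marker_centroid(frame: Frame, threshold: int = 200) -> Optional[Tuple[float, float]]:
    """Return the intensity-weighted centroid (x, y) of all pixels at or above
    `threshold`, or None if no pixel is bright enough.

    Assumes a single marker is visible; separating several markers would need
    an extra step such as connected-component labeling.
    """
    total = 0.0
    sum_x = 0.0
    sum_y = 0.0
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value >= threshold:
                # Brighter pixels pull the centroid more strongly
                total += value
                sum_x += x * value
                sum_y += y * value
    if total == 0:
        return None
    return (sum_x / total, sum_y / total)


frame = [[0] * 5 for _ in range(5)]
frame[2][2] = 255
frame[2][3] = 255
print(marker_centroid(frame))  # (2.5, 2.0)
```

Weighting by intensity gives sub-pixel precision, which matters when the marker covers only a few pixels.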

Real-time AR visualization using markers on the tips of swords was demonstrated for the first time in front of an audience at the 2014 demo match for the Yuki Ota Cup. Real-time AR was live-streamed in 2017 for NTT DOCOMO FUTURE EXPERIMENT Vol. 2, where ball-shaped reflective markers were replaced with reflective tape so that fencers could move normally without any restrictions.
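A real-time trajectory overlay only needs a short history of recent tip positions. The sketch below assumes a hypothetical `TipTrail` class that keeps the last N positions in a fixed-length deque and gives older points lower opacity so the trail fades out; the trail length and the linear fade are illustrative choices, not details taken from the project.

```python
from collections import deque
from typing import Deque, List, Tuple

Point = Tuple[float, float, float]  # tip position (x, y, z), e.g. in meters


class TipTrail:
    """Keeps the most recent sword tip positions for drawing a fading trail."""

    def __init__(self, max_points: int = 30) -> None:
        # A deque with maxlen discards the oldest point automatically when full
        self.points: Deque[Point] = deque(maxlen=max_points)

    def push(self, point: Point) -> None:
        self.points.append(point)

    def segments(self) -> List[Tuple[Point, float]]:
        """Return (point, alpha) pairs, oldest first. Alpha rises linearly
        from 1/n for the oldest point to 1.0 for the newest."""
        n = len(self.points)
        return [(p, (i + 1) / n) for i, p in enumerate(self.points)]
```

Each new frame costs one `push`, and the renderer draws line segments between consecutive points using their alpha values, so the cost per frame stays constant regardless of how long the match runs.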

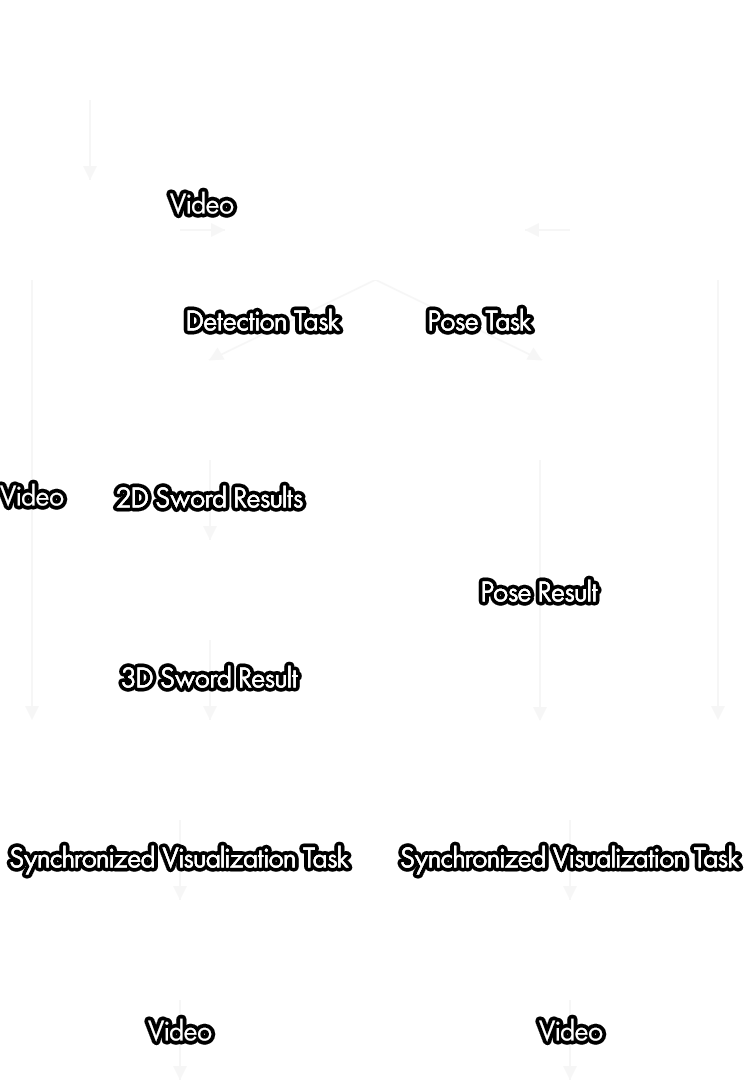

In 2016, we began developing a system that can detect the sword tip position from camera images alone, without causing any inconvenience to fencers, so that it could be introduced in actual matches. The difficulty was that fencing swords move very quickly, and their shape changes greatly as they bend. In addition, a sword tip is only a few pixels wide even when captured by a 4K camera, which is too small to detect with traditional image processing approaches.

These challenges led us to develop a new deep neural network system for object detection with cascaded classifiers based on YOLO v3 [1], a general-purpose deep learning algorithm for object detection, combined with a special hardware and camera configuration. Our algorithm detected the position of sword tips with high accuracy. A single camera can cover only 8 meters, but by installing 24 cameras in total on both sides of the piste, we were able to cover the whole area. This broader coverage also improved the robustness of sword tip detection. More accurate 2D position estimates from multiple cameras also allowed us to estimate the 3D position.
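Estimating a 3D position from 2D detections in several calibrated cameras is a standard triangulation problem. The source does not say which method the system uses; the sketch below shows plain linear least-squares triangulation (a DLT-style formulation with the homogeneous coordinate fixed to 1), assuming each camera is described by a known 3×4 projection matrix `P` and reports the tip at pixel `(u, v)`. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Matrix34 = Sequence[Sequence[float]]  # 3x4 camera projection matrix


def _solve3(m: List[List[float]], b: List[float]) -> List[float]:
    """Solve the 3x3 system m x = b by Gaussian elimination with partial pivoting."""
    a = [row[:] + [rhs] for row, rhs in zip(m, b)]  # augmented matrix
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("degenerate camera configuration")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, 3):
            f = a[r][col] / a[col][col]
            for c in range(col, 4):
                a[r][c] -= f * a[col][c]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):  # back substitution
        s = a[r][3] - sum(a[r][c] * x[c] for c in range(r + 1, 3))
        x[r] = s / a[r][r]
    return x


def triangulate(observations: Sequence[Tuple[Matrix34, float, float]]) -> Tuple[float, float, float]:
    """Least-squares 3D point from two or more views, each given as (P, u, v)."""
    rows: List[List[float]] = []
    rhs: List[float] = []
    for P, u, v in observations:
        for coord, k in ((u, 0), (v, 1)):
            # coord * (P[2] . X) - (P[k] . X) = 0 with X = (x, y, z, 1)
            rows.append([coord * P[2][j] - P[k][j] for j in range(3)])
            rhs.append(-(coord * P[2][3] - P[k][3]))
    # Normal equations: (A^T A) x = A^T b
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [sum(r[i] * b for r, b in zip(rows, rhs)) for i in range(3)]
    x, y, z = _solve3(ata, atb)
    return (x, y, z)
```

Every additional camera that sees the tip simply adds two more rows to the system, which is one way overlapping coverage can make the estimate less sensitive to an error in any single view.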

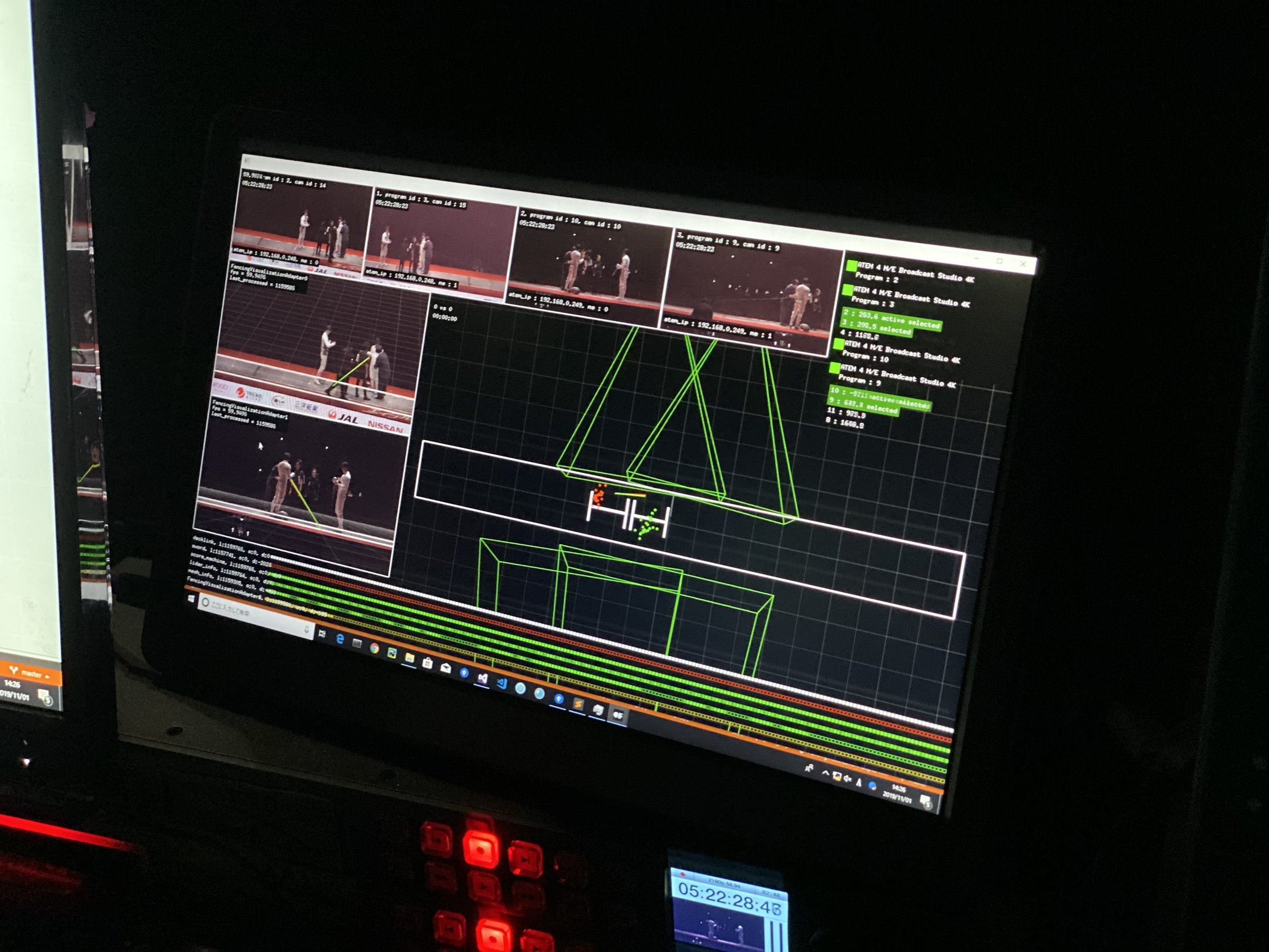

This system was demonstrated in exhibition matches at the 71st All Japan Fencing Championships (2018) and introduced into actual matches for the first time at the 72nd All Japan Fencing Championships (2019), where sword tip detection was updated from 2D to 3D with an elaborate visualization including pose estimation.

For its introduction into actual matches, the system was extended to detect not only the position of the sword tips but also their angle, as well as the position and angle of the sword guard. Because the system could now capture the shape of the sword, the AR visuals advanced from 2D to 3D, and integrating the pose estimation technology mentioned above drastically upgraded the visualization.
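Once the 3D positions of the tip and the guard are known, the blade's direction and angle follow from simple vector arithmetic. The sketch below assumes both points are in a coordinate frame with z pointing up (a hypothetical convention) and treats the straight guard-to-tip line as the blade axis, which is only an approximation when the blade bends.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def blade_direction(guard: Vec3, tip: Vec3) -> Tuple[Vec3, float, float]:
    """Return (unit vector from guard to tip, elevation in degrees, guard-to-tip distance).

    Elevation is the angle above the horizontal x-y plane, assuming z is up.
    """
    d = (tip[0] - guard[0], tip[1] - guard[1], tip[2] - guard[2])
    length = math.sqrt(d[0] ** 2 + d[1] ** 2 + d[2] ** 2)
    if length == 0.0:
        raise ValueError("guard and tip coincide")
    unit = (d[0] / length, d[1] / length, d[2] / length)
    horizontal = math.hypot(d[0], d[1])
    elevation = math.degrees(math.atan2(d[2], horizontal))
    return unit, elevation, length
```

For example, a guard at the origin and a tip at (3, 0, 4) give a distance of 5, a unit vector of (0.6, 0, 0.8), and an elevation of about 53.13 degrees.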

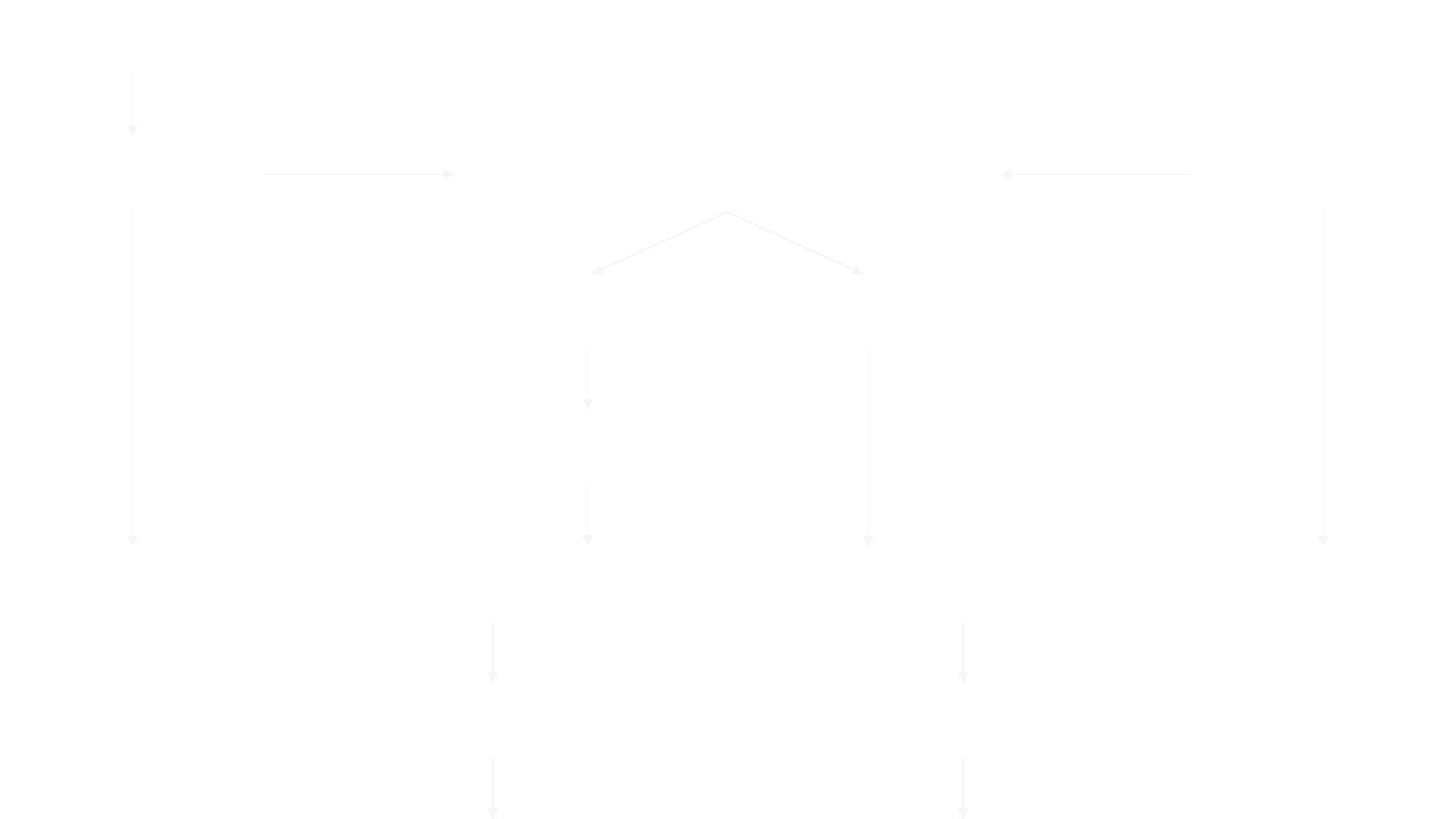

System Architecture for H.I.H. Prince Takamado Trophy JAL Presents Fencing World Cup 2019

Abstraction of 2D sword tip detection algorithm (as of 2018)

Making

2014

Tested AR synthesis of sword trajectories with a real-time tracking system using retro-reflective markers.

2016

Started prototyping sword tip tracking without markers. A computer vision algorithm (corner detection based on local features) was used instead of markers, but it was not successful because its accuracy was too low.

2017

Started a machine learning approach by filming a simulated match and preparing a supervised dataset. Sword tip detection using cascaded classifiers based on YOLO v2 [2], a deep learning algorithm for object detection, was a breakthrough, but at this stage its quality still needed to improve before it could be used for trajectory synthesis.
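The source describes the detector as cascaded classifiers but does not spell out the stages. One common way to find objects only a few pixels wide in a 4K frame is coarse-to-fine detection: a first detector runs on a downscaled frame to find candidate regions, then a second detector runs on full-resolution crops and its output is mapped back to frame coordinates. Downscaling matters here: shrinking a 3840-pixel-wide frame to 416 pixels scales everything by about 1/9.2, so a tip 4 pixels wide becomes less than half a pixel. The sketch below shows only the coordinate bookkeeping; `coarse_detect` and `fine_detect` are hypothetical stand-ins for trained networks, and the 416-pixel input and 256-pixel crop sizes are assumptions.

```python
from typing import Callable, List, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1)


def crop_window(center: Point, size: int, frame_w: int, frame_h: int) -> Box:
    """Square crop of side `size` centered on `center`, shifted to stay inside the frame."""
    x0 = min(max(center[0] - size / 2, 0), frame_w - size)
    y0 = min(max(center[1] - size / 2, 0), frame_h - size)
    return (x0, y0, x0 + size, y0 + size)


def cascade_detect(
    frame_w: int,
    frame_h: int,
    coarse_detect: Callable[[float], List[Box]],
    fine_detect: Callable[[Box], List[Point]],
    input_size: int = 416,
    crop_size: int = 256,
) -> List[Point]:
    """Two-stage detection returning tip points in full-resolution pixels.

    coarse_detect(scale): boxes found on the frame downscaled by `scale`,
        in downscaled-image coordinates (hypothetical stand-in).
    fine_detect(crop): tip points inside the full-resolution `crop`,
        relative to the crop's top-left corner (hypothetical stand-in).
    """
    scale = input_size / max(frame_w, frame_h)  # about 0.108 for a 3840-pixel frame
    tips: List[Point] = []
    for x0, y0, x1, y1 in coarse_detect(scale):
        # Map the coarse box center back to full-resolution coordinates
        center = ((x0 + x1) / 2 / scale, (y0 + y1) / 2 / scale)
        crop = crop_window(center, crop_size, frame_w, frame_h)
        for px, py in fine_detect(crop):
            # Convert crop-local coordinates to frame coordinates
            tips.append((crop[0] + px, crop[1] + py))
    return tips
```

The fine stage sees the tip at native resolution, while the coarse stage only has to find the roughly correct region, which is a much easier task at low resolution.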

2018

Switched to the YOLO v3 object detection algorithm [1] and succeeded in detecting sword tips in 2D images from a single camera by restricting the tracking area to the center of the piste. A demonstration in an exhibition match at the All Japan Fencing Championships successfully generated trajectories for simple motions, but the quality was not adequate for use in actual matches.
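Restricting tracking to the center of the piste can be expressed as a region-of-interest filter applied to detections before trajectory generation. The source does not describe how the 2018 system implemented the restriction; this sketch assumes each detection carries a pixel position and a confidence score, uses hypothetical ROI bounds and score threshold, and keeps at most two tips (one per fencer).

```python
from typing import List, NamedTuple, Tuple


class Detection(NamedTuple):
    x: float  # pixels
    y: float  # pixels
    score: float  # detector confidence in [0, 1]


def tips_in_roi(
    detections: List[Detection],
    roi: Tuple[float, float, float, float],  # (x0, y0, x1, y1) in pixels
    min_score: float = 0.5,
    max_tips: int = 2,
) -> List[Detection]:
    """Keep confident detections inside the ROI, most confident first."""
    x0, y0, x1, y1 = roi
    inside = [
        d for d in detections
        if x0 <= d.x <= x1 and y0 <= d.y <= y1 and d.score >= min_score
    ]
    inside.sort(key=lambda d: d.score, reverse=True)
    return inside[:max_tips]
```

Discarding everything outside a fixed region removes many false positives cheaply, which is also why it cannot work once fencers move toward the ends of the piste.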

2019

Gathered large machine learning datasets to increase accuracy so that the system could be introduced into actual matches. We shot footage at Kiramesse Numazu using 8 cameras and 12 fencers under several background lighting conditions, and annotated and labeled over 200,000 images.
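YOLO-family detectors are trained on bounding boxes, while a sword tip is essentially a point. One plausible way to turn a point annotation into a training label, assumed here rather than taken from the source, is to place a small fixed-size box around the annotated pixel and write it in the normalized `class x_center y_center width height` text format used for Darknet YOLO training. The 16-pixel box size and class id 0 are hypothetical.

```python
def point_to_yolo_label(
    x: float, y: float, img_w: int, img_h: int, box_px: float = 16.0, class_id: int = 0
) -> str:
    """Convert a tip annotated at pixel (x, y) into a Darknet-style YOLO label line.

    The box is clipped to the image so labels near the border stay valid.
    """
    half = box_px / 2
    x0, x1 = max(x - half, 0.0), min(x + half, float(img_w))
    y0, y1 = max(y - half, 0.0), min(y + half, float(img_h))
    # Coordinates are normalized to [0, 1] by the image size
    cx = (x0 + x1) / 2 / img_w
    cy = (y0 + y1) / 2 / img_h
    w = (x1 - x0) / img_w
    h = (y1 - y0) / img_h
    return f"{class_id} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}"


print(point_to_yolo_label(1920, 1080, 3840, 2160))
# 0 0.500000 0.500000 0.004167 0.007407
```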

In addition, more than a million CG images were created for data augmentation, so that images rendered with different backgrounds, sword appearances, lighting, and other conditions could be used as training data.
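Rendering large numbers of CG training images usually means sampling scene parameters at random for each image so the detector sees varied conditions. The source names backgrounds, sword appearance, and lighting as varied factors; the parameter names, value ranges, asset names, and the eight-camera default below are hypothetical, and the rendering step itself is out of scope.

```python
import random
from dataclasses import dataclass
from typing import List

BACKGROUNDS = ["arena_dark", "arena_bright", "gym", "studio"]  # hypothetical asset names
BLADE_FINISHES = ["polished", "matte", "worn"]  # hypothetical sword appearances


@dataclass(frozen=True)
class RenderParams:
    background: str
    light_intensity: float  # relative to a nominal lighting setup
    light_color_temp_k: float
    blade_finish: str
    camera_id: int


def sample_render_params(n: int, seed: int = 0, num_cameras: int = 8) -> List[RenderParams]:
    """Draw n independent parameter sets; a fixed seed makes the dataset reproducible."""
    rng = random.Random(seed)
    return [
        RenderParams(
            background=rng.choice(BACKGROUNDS),
            light_intensity=rng.uniform(0.5, 1.5),
            light_color_temp_k=rng.uniform(3000.0, 6500.0),
            blade_finish=rng.choice(BLADE_FINISHES),
            camera_id=rng.randrange(num_cameras),
        )
        for _ in range(n)
    ]
```

Using a dedicated `random.Random` instance rather than the module-level generator keeps the sampled dataset reproducible even if other code also draws random numbers.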

Built a miniature set inside a studio to evaluate the 3D sword tip estimation algorithm and to focus on developing a system capable of operating in real time, and succeeded in real-time 3D sword tip estimation from 2D images alone.

The 72nd All Japan Fencing Championship

H.I.H. Prince Takamado Trophy JAL Presents Fencing World Cup 2019

Developed a more accurate algorithm and system that works without tracking-area restrictions. Extending sword tip position estimation from 2D to 3D and accounting for various conditions specific to fencing produced a more accurate and robust system that can be used in actual matches. In addition, the 3D pose estimation algorithm was integrated into the system, accompanied by major upgrades to the visualization styles.
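The source does not list which fencing-specific conditions were considered. As one hypothetical example of such a constraint, a tracker can reject a new 3D estimate that falls outside the volume around the piste or that would require the tip to move implausibly far since the last accepted estimate. The coordinate convention (x along the piste centered at 0, z up) and all limits below are assumptions; only the 14 m piste length is a known figure.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class TipGate:
    """Rejects implausible 3D tip estimates (all limits are illustrative)."""

    half_length_m: float = 8.0   # piste is 14 m long; assumed 1 m margin at each end
    half_width_m: float = 2.0    # generous margin around the piste width
    max_height_m: float = 3.0
    max_speed_mps: float = 50.0  # hypothetical upper bound on tip speed
    last: Optional[Vec3] = None  # last accepted estimate

    def accept(self, p: Vec3, dt: float) -> bool:
        """Return True and remember p if it is plausible; dt is seconds since the last accepted estimate."""
        x, y, z = p
        in_volume = (
            abs(x) <= self.half_length_m
            and abs(y) <= self.half_width_m
            and 0.0 <= z <= self.max_height_m
        )
        if not in_volume:
            return False
        if self.last is not None:
            dist = sum((a - b) ** 2 for a, b in zip(p, self.last)) ** 0.5
            if dist > self.max_speed_mps * dt:
                return False
        self.last = p
        return True
```

Passing the time since the last accepted estimate as `dt`, rather than a fixed frame interval, lets the gate relax automatically after a few missed frames instead of rejecting every later estimate.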

Reference

- [1] Redmon, Joseph and Ali Farhadi. “YOLOv3: An Incremental Improvement.” arXiv:1804.02767 (2018).

- [2] Redmon, Joseph and Ali Farhadi. “YOLO9000: Better, Faster, Stronger.” 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2017): 6517-6525.

Credit

Fencing Visualized Project

- Creative Direction

- Kaoru Sugano (Dentsu Lab Tokyo)

- Planning, Creative Direction

- Daito Manabe

- Planning, Technical Direction, Hardware Engineering

- Motoi Ishibashi

- Technical Direction, System Development, Software Engineering

- Yuya Hanai

- Planning

- Kazuyoshi Ochi / Ryosuke Sone (Dentsu Lab Tokyo)

- Produce

- Kohei Ai (Dentsu Lab Tokyo)

Yuki Ota Fencing Visualized project - Technology×Fencing (2013)

- Technical Director

- Daito Manabe

- Technical Support

- Motoi Ishibashi, Yuya Hanai

*Fencing tracking and visualization system by Rhizomatiks Research (Daito Manabe + Motoi Ishibashi)

Yuki Ota Fencing Visualized project - Yuki Ota Fencing Championship (2014)

- Creative Director

- Daito Manabe

- Technical Director

- Motoi Ishibashi

- Programmer

- Yuya Hanai

- Technical Support

- Momoko Nishimoto, Toshitaka Mochizuki, Masaaki Ito

NTT DOCOMO | FUTURE-EXPERIMENT VOL.02 Expanding the Viewpoint (2017)

- Planning, Creative Director

- Daito Manabe

- Technical Director, Programmer

- Motoi Ishibashi

- System Engineer

- Yuya Hanai

- System Developer

- Ryohei Komiyama

- Visual Programmer

- Satoshi Horii

- System Operator

- Muryo Homma, Tai Hideaki

- System Operator Craft

- Toshitaka Mochizuki, Saki Ishikawa

- Project Manager, Producer

- Takao Inoue

The 71st All Japan Fencing Championship (2018)

- Technical Direction, System Development, Software Engineering

- Yuya Hanai

- Planning, Creative Direction

- Daito Manabe

- Planning, Technical Direction, Hardware Engineering

- Motoi Ishibashi

- Visual Programming

- Satoshi Horii

- Visual Programming

- Futa Kera

- Videographer

- Muryo Homma

- Hardware Engineering

- Yuta Asai

- Hardware Engineering

- Kyohei Mouri

- Technical Support

- Saki Ishikawa

- Project Management

- Kahori Takemura

- Project Management, Produce

- Takao Inoue

The 72nd All Japan Fencing Championship (2019)

- Technical Direction, System Development, Software Engineering

- Yuya Hanai

- Planning, Creative Direction

- Daito Manabe

- Planning, Technical Direction, Hardware Engineering

- Motoi Ishibashi

- Software Engineering

- Kyle McDonald (IYOIYO)

- Software Engineering

- anno lab (Kisaku Tanaka, Sadam Fujioka, Nariaki Iwatani, Fumiya Funatsu), Kye Shimizu

- Dataset System Engineering

- Tatsuya Ishii

- Dataset System Engineering

- ZIKU Technologies, Inc. (Yoshihisa Hashimoto, Hideyuki Kasuga, Seiji Nanase, Daisetsu Ido)

- Dataset System Engineering

- Ignis Imageworks Corp. (Tetsuya Kobayashi, Katsunori Kiuchi, Kanako Saito, Hayato Abe, Ryosuke Akazawa, Yuya Nagura, Shigeru Ohata, Ayano Takimoto, Kanami Kawamura, Yoko Konno)

- Visual Programming

- Satoshi Horii, Futa Kera

- Videographer

- Muryo Homma

- Hardware Engineering

- Yuta Asai, Kyohei Mouri

- Project Management

- Kahori Takemura

- Project Management, Produce

- Takao Inoue

H.I.H. Prince Takamado Trophy JAL Presents Fencing World Cup 2019 (2019)

- Technical Direction, System Development, Software Engineering

- Yuya Hanai

- Planning, Creative Direction

- Daito Manabe

- Planning, Technical Direction, Hardware Engineering

- Motoi Ishibashi

- Software Engineering

- Kyle McDonald (IYOIYO)

- Software Engineering

- anno lab (Kisaku Tanaka, Sadam Fujioka, Nariaki Iwatani, Fumiya Funatsu), Kye Shimizu

- Dataset System Engineering

- Tatsuya Ishii

- Dataset System Engineering

- ZIKU Technologies, Inc. (Yoshihisa Hashimoto, Hideyuki Kasuga, Seiji Nanase, Daisetsu Ido)

- Dataset System Engineering

- Ignis Imageworks Corp. (Tetsuya Kobayashi, Katsunori Kiuchi, Kanako Saito, Hayato Abe, Ryosuke Akazawa, Yuya Nagura, Shigeru Ohata, Ayano Takimoto, Kanami Kawamura, Yoko Konno)

- Visual Programming

- Satoshi Horii, Futa Kera

- Videographer

- Muryo Homma

- Hardware Engineering & Videographer Support

- Toshitaka Mochizuki

- Hardware Engineering

- Yuta Asai, Kyohei Mouri, Saki Ishikawa

- Project Management

- Kahori Takemura

- Project Management, Produce

- Takao Inoue